Basement Blockbusters, IP Generators, and the future of film creation

What a combined experiment with Claude, ChatGPT, Perplexity, Suno, ElevenLabs, Midjourney, Luma, and Runway taught me about the future of storytelling

In our previous exploration, we looked at what disruption holds for the media industry across filmmaking, gaming, advertising, and social media. We focused on how the barriers to high-quality content creation are crumbling under the weight of Generative AI's relentless advance. We also outlined how a zero-cost production era will slash budgets and potentially democratize visual storytelling.

In the next few posts, I will do some deep dives into each part of the media industry, starting with film and movies, to unpack what disruption will look like across each area. I want to do this through some experimentation using as many current tools as possible. For this post, I was able to use GPT-4o, Claude 3.5 Sonnet, Perplexity, Midjourney 6.1, Runway Gen-3 Alpha, Luma AI, Suno, and ElevenLabs. The reason for this seemingly gratuitous use of gen AI models is to build minimum viable products that demonstrate how the future of content creation is already being realized.

In this post, we’ll roll up our sleeves and create a cinematic universe from scratch, conceptualizing the IP, generating scripts, designing characters and environments, visualizing key scenes, producing a teaser trailer, and thinking through what the end-consumer product could look like via a “generative content ecosystem”. This hands-on approach will show exactly what's possible today, but more importantly, it will offer an idea of what's coming very soon. Alright, let’s do it.

First, a framework for reference

I want to start by outlining the key stages in the film creation value chain, from initial concept to final outputs. Once we ground ourselves here, we can start to unpack how gen AI can impact this process now and how it will impact it in the future.

A quick note: I've intentionally stripped away the logistical and financial components of the traditional film industry value chain below. While elements like financing, production management, and distribution are crucial to the overall filmmaking process, and generative AI will certainly disrupt them, our focus here is squarely on content creation.

As you can see, the film creation process is dense. It spans from the initial spark of an idea to the final product reaching audiences worldwide. The framework above outlines five key phases: Development, Pre-Production, Production, Post-Production, and Distribution. Each phase encompasses critical tasks that collectively bring a cinematic vision to life.

The intricacy and resource-intensive nature of this process make it ripe for gen AI disruption. The traditional barriers of high costs, specialized skills, and extensive time investments are being challenged by generative AI’s capabilities to generate massive amounts of content, iterate, and democratize the high-value outputs critical to filmmaking.

As mentioned before, we will address most of this value chain in this post, but I want to primarily focus on three areas: Development, Production, and Distribution. These phases represent the bookends and core of the filmmaking process, where generative AI's impact is poised to be most transformative early on. From AI-assisted world-building and character development to AI-generated visual effects and distribution ecosystems, we'll explore how these technologies reshape the cinema creation and consumption landscape.

The Development Phase

The current Development Phase is a time-intensive, often solitary process. Screenwriters and creative teams spend months, sometimes years, developing concepts, building or acquiring worlds, and working within them to craft characters and structure narratives. This phase is crucial but often fraught with uncertainty, as creators struggle to predict what will resonate with audiences and studios.

World Building with Claude 3.5 Sonnet

Current frontier models like Claude 3.5 Sonnet and GPT-4o demonstrate how this process can be augmented in a stunningly fast and compelling way. To show how this works, I started from scratch. I gave a simple request to Claude 3.5 Sonnet to help me develop a fantasy universe, asking it to think like great fantasy writers and help guide me through the process.

Fantasy may not be your favorite genre, but it’s a really interesting place to explore world-building. Here are some snippets of the world I created using Claude.

After about three hours, I had generated over 80 pages of structured content defining this world. The sheer volume was impressive, but it was the intricate detail that really stood out. The snippets of content below give you a sense of just how comprehensive it was. The Artifacts feature was incredibly helpful in organizing and scaling the substantial amount of content needed to build the world.

Creating a Fantasy Generator

Now that I’d created all of this background to build the world, I synthesized all of it into some structured Word docs and fed them into a Project in Claude.
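To make that synthesis step concrete, here is a minimal Python sketch of one way to merge structured world-building notes into a single reference document before uploading it as project knowledge. It is an illustration under stated assumptions, not the actual process used here: the `WorldSection` shape, the `build_world_bible` helper, and the placeholder section text are all hypothetical, and the upload itself would still happen by hand in the Claude interface.

```python
from dataclasses import dataclass


@dataclass
class WorldSection:
    """One structured chunk of world-building notes (hypothetical shape)."""
    title: str
    body: str


def build_world_bible(world_name: str, sections: list[WorldSection]) -> str:
    """Join numbered sections into one plain-text reference document."""
    parts = [f"WORLD BIBLE: {world_name}"]
    for number, section in enumerate(sections, start=1):
        parts.append(f"{number}. {section.title}\n{section.body.strip()}")
    return "\n\n".join(parts)


# Placeholder content for illustration only; not the world built in this post.
sections = [
    WorldSection("History", "Placeholder summary of the world's major eras."),
    WorldSection("Magic system", "Placeholder rules and limits of magic."),
    WorldSection("Key characters", "Placeholder character sketches."),
]
world_bible = build_world_bible("Example World", sections)
print(world_bible)
```

Keeping each topic as its own titled, numbered section makes it easier to update one part of the world later without regenerating the whole document.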

Impact on the Development Phase

So, I now have a tool that intimately knows the world I want to build, and I can simply have a conversation with it to generate any story, character, environment, or visual treatment I want.

This type of IP Generator is, of course, only a minimum viable product, but it shows us where the development phase of content creation could lead. First is the unprecedented ability to create scalable content. Studios or individual creators can rapidly build and expand on content within a given world or universe in ways that were unfathomable pre-gen AI.

This scalability will be extremely important when it comes to mitigating risk for bigger production efforts. Studios will be able to test and iterate on multiple concepts within an established IP framework, potentially reducing the high failure rates that have long plagued the industry. The potential for adaptive storytelling opens new frontiers in audience engagement, enabling content to evolve based on viewer feedback and market trends, possibly revolutionizing how stories are told and consumed.

This “IP Generator” concept will also enhance collaboration among creative teams, serving as a shared creative portal that streamlines the often-cumbersome pre-production process. Taken together, these capabilities mean IP Generators not only augment existing practices but fundamentally reshape the approach to content creation and IP management, promising a more efficient, responsive, and profitable future for entertainment production.

A few more examples of the IP Generator’s output:

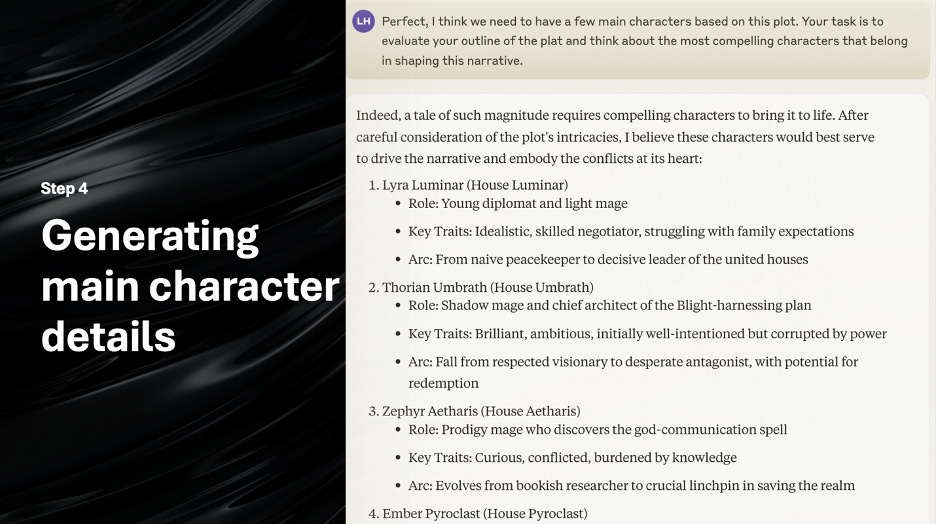

Using the IP Generator, I asked it to create an outline for a story within the world, focusing on a character-driven approach. It outlined several characters and a decent plot for a main story:

Finally, I asked it to generate Artifacts in the form of character-driven scripts to show how the basic plot progresses, the key decisions, and the general arc of the story:

The Production Phase

Now that we have our IP generator and a working script, we can move to the Production Phase. The Production Phase in filmmaking is a complex, resource-intensive process, often hindered by ever-increasing expenses and lengthy timelines that don’t align with desired deadlines. This phase traditionally encompasses everything from principal photography to set design, costume creation, and special effects – all requiring significant investments in time, talent, and financial resources.

Despite technological advancements, many aspects of production remain stubbornly rooted in methodologies developed decades ago. The result is often a process plagued by inefficiencies, with ballooning costs that can sink a project before it even reaches audiences.

In the following sections, we'll explore two key areas as examples of this potential disruption:

Character Art Creation: We'll demonstrate how AI can rapidly generate and iterate on character designs (as well as costumes and sets), potentially compressing months of work into days or even hours.

Content Development: We'll showcase how generative AI video can create compelling scenes and scenarios, potentially shifting content creation to a wholly AI-augmented approach in some cases.

Let’s pick back up the fantasy world we’ve been building. After generating a script and asking for some in-depth descriptions of the key characters, I asked Claude to develop character profile descriptions that aligned with Midjourney's best practices. I then took those descriptions and put them into my ChatGPT “Midjourney Generator” tool to create refined prompts. Here’s the output:

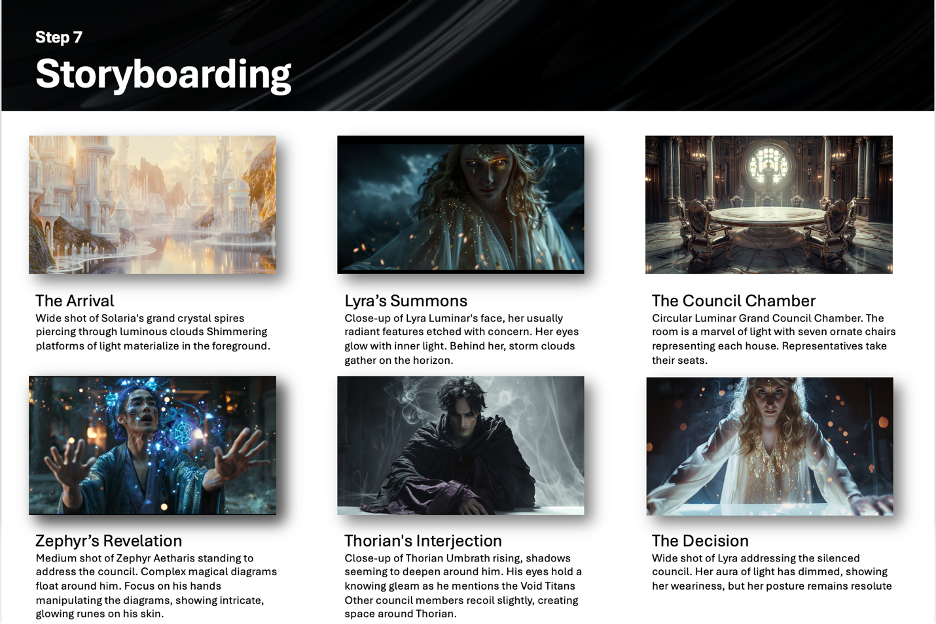
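The handoff from character description to image prompt can be sketched in a few lines of Python. This is a rough illustration, not the “Midjourney Generator” tool itself: the `CharacterProfile` fields, the `to_image_prompt` helper, and the example character are all hypothetical, and the `--ar` and `--v` suffixes are assumed defaults that you would adjust to your own Midjourney settings.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterProfile:
    """Structured character description (hypothetical fields)."""
    name: str  # kept for bookkeeping; not included in the prompt text
    role: str
    appearance: str
    wardrobe: str
    mood: str
    style_tags: list[str] = field(default_factory=list)


def to_image_prompt(
    profile: CharacterProfile,
    aspect_ratio: str = "2:3",  # assumed default, adjust as needed
    version: str = "6.1",       # assumed default, adjust as needed
) -> str:
    """Flatten a profile into one comma-separated prompt with parameter suffixes."""
    descriptors = [
        f"{profile.role}, {profile.appearance}",
        f"wearing {profile.wardrobe}",
        f"{profile.mood} mood",
        *profile.style_tags,
    ]
    return f"{', '.join(descriptors)} --ar {aspect_ratio} --v {version}"


# Placeholder character for illustration only; not from the world in this post.
profile = CharacterProfile(
    name="Placeholder Hero",
    role="wandering cartographer",
    appearance="weathered face, silver-streaked hair",
    wardrobe="a patched travel cloak",
    mood="determined",
    style_tags=["cinematic lighting", "fantasy concept art"],
)
prompt = to_image_prompt(profile)
print(prompt)
```

Holding the description in a structured form first means the same profile can be reused for alternate prompts, such as a different aspect ratio for storyboard frames.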

Using a very similar process, I did the same exercise to develop storyboards for what a 30-second trailer could look like:

Then, using Luma Labs and Runway Gen-3 Alpha for visuals, I created this trailer by bringing Midjourney-generated concept art to life. I also used the IP Generator tool to recommend the key frames needed, develop best-practice-informed prompts for a theme song in the text-to-music app Suno, and write a voice-over script to be read by ElevenLabs AI. It was truly a “use all tools” experiment. Everything you see here is AI-generated; the only manual work needed was to stitch it together in iMovie:

The Distribution Phase

The film industry, and media industry more broadly, are in for a dramatic transformation. From this exercise, we can see that the way we create, consume, and interact with content is going to face serious disruption in the coming months and years. The experiments we've run, from building an entire fantasy universe to generating character designs and trailers, are a window into a future that's rapidly hurtling toward us. Let's unpack the implications for distribution.

First off, we're looking at a future where the barriers to creating rich, complex IP universes are practically obliterated. Think about it – we built an entire fantasy world, complete with its history, magic system, and character arcs, in a matter of hours. Now, imagine this capability in the hands of everyone from big-budget studio execs to that kid with a smartphone and a wild imagination… and all her friends collaborating. We're talking about a democratization of storytelling on a scale we've never seen before. What does this do to blockbuster films and streaming platforms?

These traditional gatekeepers are about to face a tidal wave of grassroots creativity. We're likely to see the rise of micro-universes, each with its own dedicated following. These will potentially be fully realized, multi-media IP ecosystems. The line between professional and amateur creators? It's about to get real blurry, real fast.

Much as streaming platforms disrupted distribution, I believe we will witness an entirely new digital ecosystem that brings these generative models together in a simple user interface, with mechanics similar to those that underlie current social media platforms. This ecosystem will consist of users, creators, and even production studios building, sharing, and interacting with entire worlds and sub-worlds of generative content.

Our experiment showed how quickly we could generate everything from character designs to video content. Now, imagine that speed and flexibility in a platform where content can be created, shared, and iterated upon in near real-time with friends, niche community members, and fans of larger IP ecosystems à la Star Wars or the MCU.

This is where the traditional boundaries between different forms of media start to break down and the linear distribution process begins to blow up. Our little experiment shows how seamlessly we could move between text, images, video, and audio. Creation will become collaborative and scale at a near-exponential rate. In this new content ecosystem, the distinctions between films, TV shows, games, and social media content increasingly overlap. We're looking at the emergence of new, hybrid media forms that blur the rigid linear elements of all these traditional categories.

The implications here are huge. Content consumption will become a much more active, participatory experience. Audiences won't just be passive viewers; far from it. They'll be collaborators, contributing to and shaping the narratives they engage with. We might see the rise of "living narratives" that evolve based on community interaction and AI-generated expansions. Forget binge-watching a series – imagine diving into a story world where your actions and choices influence the direction of the plot.

Of course, there are some serious challenges to grapple with. Quality control in a world of AI-generated content is going to be a major issue. Copyright and ownership? That's a whole can of worms studios will need to figure out. And let's not forget about the potential for information overload – with so many universes to explore, discovery and curation are going to be more crucial than ever.

There's also the question of authenticity and the human touch. As amazing as AI is, there's still something special about a story crafted by human hands and fueled by human experiences. Finding the right balance between AI assistance and human creativity is going to be key.

But these challenges, as daunting as they might seem, are really just opportunities in disguise. The future of film and content creation is finally going to be participatory. Ever-evolving narrative universes will blur the lines between creator and consumer.

The most successful creators and platforms in this new world will be those that can harness the power of AI to enhance human creativity and collaboration, foster genuine community engagement, and navigate the ethical and legal complexities of this new paradigm.

We humans are hard-wired to make sense of the world through stories, and as these technologies proliferate, it only makes sense that we will experience a fundamental shift in how we tell stories, how we connect, and how we understand the world around us.

It's going to be one hell of a ride.

Credit: I want to give a big shoutout to Kes Sampanthar for all the inspiration and ideation around the future of media.