Taking Back Control of Our Information Diet

GenAI will be our main weapon in the fight against misinformation and content overload.

It’s no secret we're constantly inundated with never-ending streams of content. While the prevailing assumption was that access to information would lead to a more informed society, it appears that instead, our society is more “information illiterate” and misinformed than ever before. But it’s not surprising given the amount of content we need to consume to truly be “informed citizens”. The battle is unwinnable when done alone and it begs some major questions for our near future:

Can we create transparency in privacy and terms of services?

Can we curate and prioritize our information diet?

Can we mitigate the erosion of trust in the content we consume?

My hypothesis: GenAI’s ability to synthesize, analyze, vet, curate, and structure our content will save us from the flood of information and misinformation we deal with every day, and the coming flood of AI-generated content.

Just imagine, instead of manually clicking around through inboxes, feeds, queues, and search engines; we will have AI assistants (agents) that will prioritize our content for us based on our needs, wishes, and desires. A little dystopian sounding, but bear with me here. GenAI could soon be the mechanism that will manage information overload; transforming how we process, understand, and engage with information.

While ChatGPT, specifically GPTs, are an incredibly powerful tool, they lack the full potential of what future semi-autonomous agents will be able to achieve for us. But GPTs give us a good signal of what the near future will look like. We can even build GPTs as a minimum viable product to showcase this near-future potential and how it might work.

Let’s look at a few areas where GenAI can help us combat information overload and information illiteracy:

Part 1: You don’t have to read the terms of service anymore (not that you, or anyone else, did anyway).

It would take the average person about 30 working days each year to read all of the terms of service agreements and privacy policies they agree to each year. I know, ridiculous. Let's face it, mundane legal documents are not something we want to (or have time to read).

These documents are dense, filled with complex jargon, and frankly, not designed for non-lawyer comprehension. But as most of us know, accepting these terms of service comes at a cost:

Data Privacy: Ever wonder what happens to your data when you click 'Agree'? GenAI can cut through the legalese, highlighting what data is collected, how it's used, and what control you have over it.

User Rights: What rights are you signing away with a simple click? Often, user agreements have clauses that limit liability, dictate dispute resolution methods, or outline service use conditions. GenAI can demystify these sections, ensuring users are aware of their rights and limitations.

Service Limitations: Ever been surprised by a service restriction or an unexpected fee? That's usually buried in the fine print. GenAI can illuminate these caveats, helping users understand service boundaries and financial obligations upfront.

Using the GPT Legal Assistant I developed, we can see how this starts to take shape.

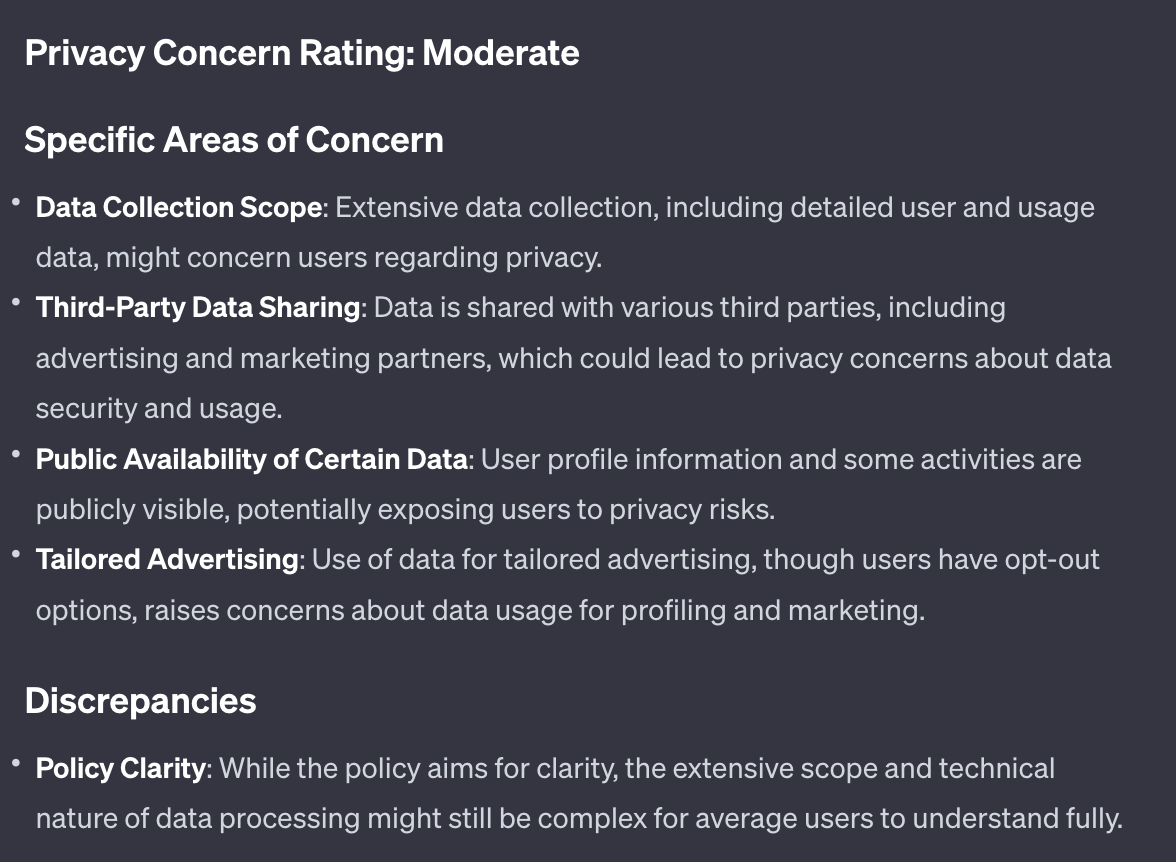

Privacy Protector analyzes Terms of Service Agreements, Privacy Policies, and other legal documents with a focus on privacy concerns. The GPT can follow hyperlinks, read PDFs, or scan copied text and respond with:

A concise summary of the document (3-5 sentences).

A Privacy Concern rating is categorized as Low, Moderate, or High.

Identification of Specific Areas of Concern, outlined in structured bullet points with brief explanations.

Highlighting any Discrepancies in the document in a structured format.

Providing a Recommendation based on the assessment.

It identifies and articulates areas where privacy might be compromised and simplifies complex legal information into an easy-to-understand format for users.

Here is an example analyzing Spotify’s data privacy policy:

Part 2: Did you even read the proposed bill? (No, because who has time to read a freaking bill?)

I’m not going to go too far down the rabbit hole, but one of the biggest issues with our democracy is uninformed or misinformed citizens. I don’t blame citizens for this. The length of policies and bills grows each year. They bounce back and forth in Congress, growing in length, changing in shape and scope, ending up as long, complicated, and nearly impossible for the average citizen to assess. This leads to:

Uninformed Citizens: We simply don’t have time to learn everything that is happening in the government we are electing, so we look for shortcuts and trust influencers in the media to tell us where we should focus.

Lack of Transparency: By making legislative content less accessible, our government can push bills that ultimately end up hurting disadvantaged citizens.

Disillusionment and Mistrust: There’s a reason political candidates stick to simple speeches and hot-button issues, it’s dramatic and it makes us pay attention. This fosters polarization, disillusionment, and mistrust in the institution.

But GenAI will help. Soon, we will have tools that can help us understand complex content like policy, bills, and even complex legal theory. It will finally give us the ability to be informed and objective citizens without relying on the bias of media organiztions.

This is an example of a policy analysis tool I developed, focused on the 900+ page proposal from the Heritage Foundation to overhaul the federal bureaucracy that will analyze the document.*

An important caveat, this is only a prototype. After hours of training the document, it was too big for the tool to accurately access the correct documents. I will post more about this challenge because it’s part of a larger experiment I’m conducting to understand the memory, navigation, and structuring of GPTs to maximize it’s knowledge base.

Part 3: “If you don’t read the news then you're uninformed. If you read the news then you’re misinformed…”

Thanks, Denzel for the inspiration.

Improving media literacy has and will continue to be a major concern across the world. And in 2024, with over 50 democracies across the world set to have elections, this will be more important than ever. With the proliferation of GenAI in the mix of all this, misinformation is about to move into uncharted territory.

I believe that GenAI tools like OpenAI's GPTs will allow us to combat the erosion of trust in information. With this in mind, I created MediaVetter. https://lnkd.in/esZPUp59

With MediaVetter, users simple paste a link to any article, blog, etc. and MediaVetter will:

✅ Provide a brief, two-sentence summary of its main points.

✅ Present a three-point synthesis of the article's context and significance.

✅ Evaluate the credibility of the publication where the article is from.

✅ Assess the factual accuracy of the article, pointing out any incorrect information, if present.

✅ Analyzes the article for bias based on the language and keywords used.

✅ Recommends and links three related articles from high-credibility sources, providing a brief synopsis for each, explaining how their perspectives might differ from the original article.

What’s Next?

The rapid evolution of GenAI presents a promising solution to the ever-growing challenges of information overload and misinformation. By leveraging the advanced capabilities of tools like the ones we discussed, we can see how the near future could open the path towards a transformative era in information management, analysis, and curation. These tools promise to streamline our interaction with complex and voluminous data and anhance our understanding and decision-making abilities.

As we navigate this era of abundance of content, the ability of GenAI to synthesize, analyze, and curate information tailored to our individual needs is not just a convenience; it's a necessity. The key challenge now, is to understand the potential ecosystem and products that will be developed to enable a more frictionless way for us to interact with these tools, something I hope to explore in the coming months.