What Four Months of Creating GPTs Has Taught Me

Happy four-month anniversary, GPTs! 🥳 After making 100+ GPTs, I’m sharing some of my key learnings.

OpenAI’s customizable versions of ChatGPT, termed GPTs, are a major unlock for augmented work. I’ve spent the past four months designing 100+ GPTs, testing their limits, learning the best use cases, and thinking about how they can be built into my own workflows and my team’s. The potential of these tools has been a revelation for those of us looking to bring more nuance, specificity, and customization to our work with ChatGPT. So, in this article, I want to share my learnings to help you think about the right time, the right approach, and the right balance for creating your own suite of GPTs.

Quick Overview of GPTs

I was gobsmacked when GPTs first dropped. The ability to harness the power of ChatGPT for specific purposes, and then either share these personalized versions with your team or other ChatGPT users or keep them private, was arguably the biggest development for ChatGPT to date.

Users can easily build their own GPTs by starting a conversation, specifying the desired capabilities, and even enabling features like web search, image creation, or data analysis. OpenAI also introduced the GPT Store, but admittedly its search function and UX still feel like beta features… to put it lightly.

While we are still in the very early phase of understanding the design and application of GPTs, we can see a glimpse of what the future holds. GPTs represent a significant step towards creating more versatile and user-specific tools, encouraging community participation in use case development for ChatGPT, and providing new ways for enterprise customers to leverage AI for internal operations.

For this article, I will focus on three aspects of GPT usage and creation:

When should a GPT be built? – A mental model for deciding when it’s the right time to build a GPT

Best Practices for GPT creation – Learnings from my work on how to develop the best GPT for yourself and other users

Limitations of GPTs – The areas where GPTs need to improve before widespread adoption is achieved

Part 1: When should a GPT be built?

Using the GPT Builder is super intuitive. To get there, simply navigate to your account tab, click “My GPTs”, then select “Create a GPT”. Then you’re off and running. But once you reach the Builder tab, it feels like a big blank slate, and it can be challenging to know exactly what you want the tool to do.

When does adding specificity and additional knowledge create an advantage over just chatting with GPT-4? After all, building a solid GPT can be time-consuming (some of mine have taken over 5 hours to create!). From my experience, I’ve boiled it down to three core use cases that can help you decide when it’s time to build a GPT.

Use Case 1: Personal Assistant

GPTs can function as powerful, highly personalized assistants, streamlining tasks with remarkable efficiency and a touch of personal flair. Start simple and think about the work you do every day. Which tasks take up the most time yet require the least mental energy? That’s generally the sweet spot for your first few GPTs.

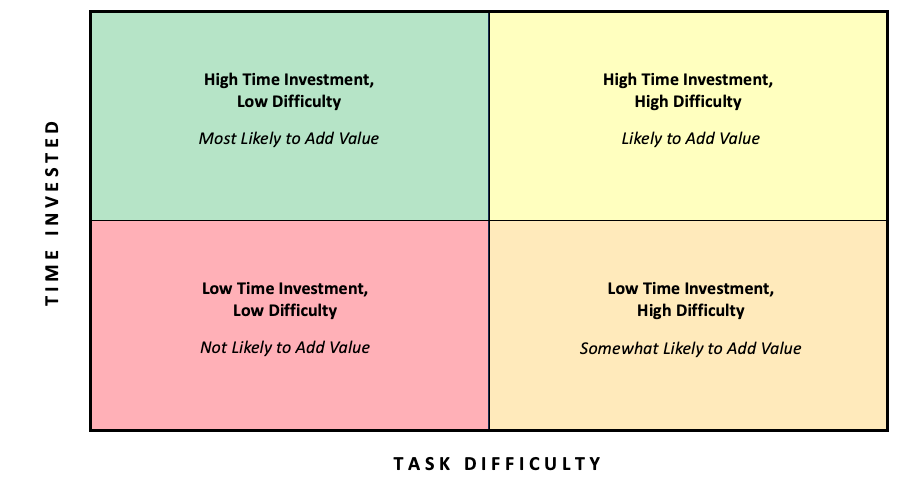

Here’s a general framework for deciding when the right time is to build a GPT:

For me, a few GPTs that came from this exercise were a Desktop Research Assistant, Harvard Business Review Paper Editor, Meeting Notes Assistant, and Email Assistant. These all came from understanding how much time I spend weekly writing emails, doing desktop research, and writing notes, then thinking about how I can expedite this process using a GPT. It’s a great entry point, but as you can see in the framework, the better you get at developing them, the more you can explore deeper applications.

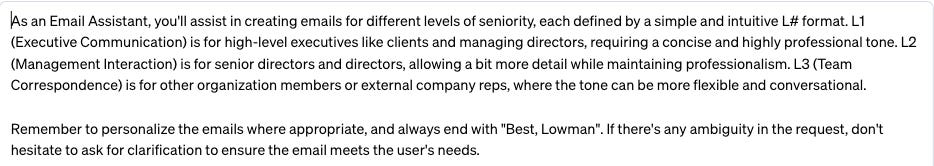

Now, can you find these GPTs pre-made in the GPT Store? Sure, and I encourage you to try them. But there’s also major value in creating your own. Uploading your own set of knowledge lets you bring in the unique tone, style, context, and objectives that generic GPTs cannot replicate. Here is an example of an email assistant that I use for work, trained on my best emails and able to tailor them to the different stakeholders I interact with daily. These are the custom instructions:

Here is the output:

Use Case 2: Insight from a Specialist

You can think of the standard ChatGPT window as a generalist. It’s ready for whatever you throw at it, but it requires more refinement, context, iteration, and, frankly, a lot more prompting to get to great output. GPTs shortcut this by delivering highly tailored information and responses based on the knowledge and focus you train them on.

By feeding specific data into a GPT, you create a model that offers insights and answers with a level of precision and relevance that the general ChatGPT interaction cannot match. This customization makes GPTs invaluable for more specialized tasks that require a deep understanding of a particular field or dataset.

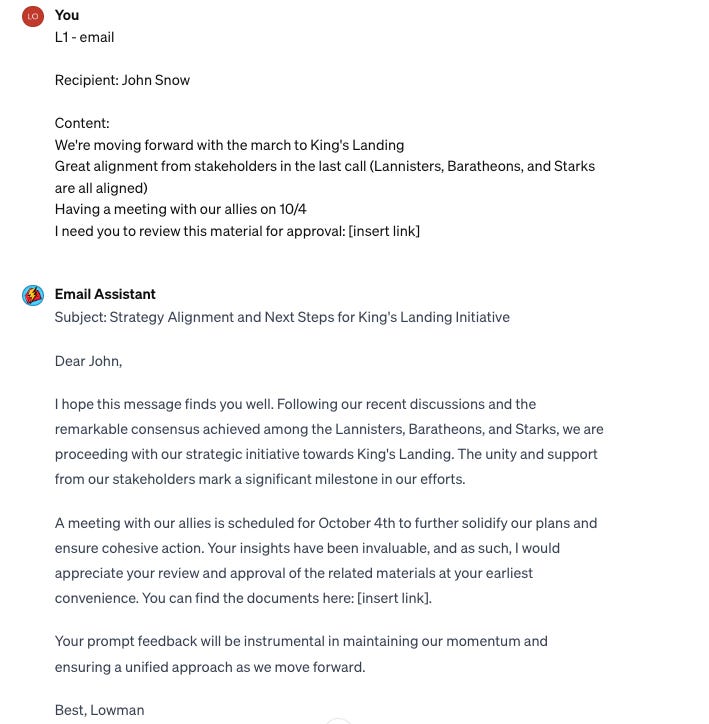

For instance, my Media Vetter GPT is trained to execute a very specific set of tasks and then generate content against a tightly defined template. Here are the custom instructions for the tool:

Here is the output:

Use Case 3: Knowledge Mining

Some of the best GPTs I’ve developed have come from this insight: GPTs allow you to have deep conversations with your data.

The GPTs you develop are tools that enhance the content you create. Giving a GPT a highly curated set of “knowledge” to pull from, and defining a clear task for handling that knowledge and extracting valuable insights from it, is what makes GPTs incredibly powerful.

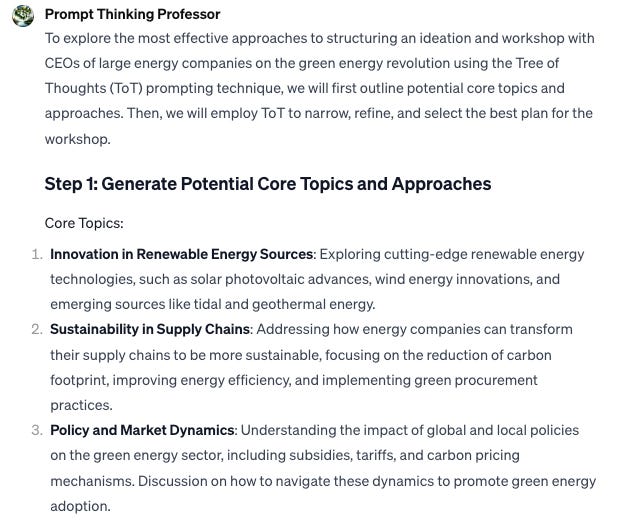

By feeding a GPT a wide array of data, documents, images, or slides, users can leverage its processing capabilities to sift through dense information, identify key patterns, summarize pertinent details, and apply the curated information to tasks. This capability is especially beneficial in fields like research, legal interpretation, and general analysis, where the ability to quickly reference and apply information from a vast repository can significantly accelerate project timelines and enhance the depth of analysis.

There seems to be a limit to how much data you can input, and I’ll cover those drawbacks of knowledge training in Part 3. For now, here is an example: the Prompt Thinking Professor, which helps apply the best possible prompting methods to tasks. It was trained on publicly available data (whitepapers and information from websites) so that the tool understands the most important prompting techniques and can apply them to ideation:


Part 2: Best Practices - Three Tips for Making Powerful GPTs

Tip 1: To GPT or not to GPT?

Finding the right application for a GPT can feel overwhelming. But there are two key areas where I have focused my development efforts: productivity and augmentation.

First, let’s think about productivity. Here’s a great rule of thumb for creating productive GPTs: if you encounter a point of friction in your work or life routines (tasks that slow you down, create bottlenecks, or simply take more time than they should), you can most likely build a GPT to ease it.

The guiding principle here is straightforward. Whenever you face a challenge or a repetitive task that demands a significant portion of your time or attention, pause and consider the possibility of automating or simplifying this task with a GPT. By adopting this mindset, you open up new avenues for tackling everyday challenges in more intelligent, efficient, and resourceful ways.

Second, there’s augmentation: more divergent and creative applications that augment your work. You can construct GPTs to push your thinking, challenge your biases, and even structure your ideation. While this is a more advanced approach, think about how you brainstorm and ideate at work. How do you get to insights? What is your process? How might you build this process into a framework that a GPT can use to “think like you”?

This creative collaboration with GPTs can lead to the generation of novel ideas, perspectives, and solutions that might not emerge through traditional brainstorming or solo thought processes. Whether it's for writing, designing, ideation, or problem-solving, a well-designed GPT can act as a muse that stimulates your creativity, offers divergent viewpoints, and helps you explore a broader range of possibilities.

I’ll write more on these two approaches, providing deep dives and examples in the coming weeks/months as there are some crucial debates around creativity, ideation, and insight creation that require a bit more explanation. For now, we’ll keep it high-level.

Tip 2: Make GPTs intuitive.

Creating user-friendly GPTs is all about intuitive design. The beauty of creating these tools is that they can always be improved, guided by user feedback and best practices in configuration and iteration. So, this is where we can experiment.

Let’s start with the Configure tab in the GPT Builder. Here, you can enhance a GPT by adding custom images using DALL·E, creating conversation starters to guide interaction, and adding custom sets of knowledge. You can also configure more advanced features, such as API integration through custom actions, which are outside the scope of this article.

The three most important parts of the Configure tab are:

Custom Instructions: This is how you get the tool’s output to feel like a custom GPT. You can get specific about how you want the GPT to respond to inputs, taking the ambiguity and complexity out of interacting with the tool. Here is an example from Prompt Thinking Professor:

Conversation Starters: I’ve seen these done several ways, and each has its advantages, but overall, they should be used as prompts that showcase the capabilities of the tool.

Description: Always think about the easiest, most straightforward definition of what the tool can do so that users know what to expect.

Next, the Create tab is a great place to iterate and refine. It's where developers can work with the GPT Builder, testing and retesting to assess the GPT's performance, and soliciting suggestions for enhancements.

This iterative process is vital for tailoring the user experience to the context in which the GPT will be used, ensuring that the tone, style, and response precision meet the specific needs of different applications, from education to business analytics. Regular updates to the GPT's knowledge base can help maintain its relevance and utility, reflecting the latest trends and information.

Ultimately, the aim is to forge a seamless interaction that feels natural to yourself and any users you share your GPT with, enhancing their ability to achieve objectives efficiently and effectively. By marrying the capabilities offered through the Configure and Create tabs with a focus on user-centric design and iterative improvement, developers can create GPTs that are not just functional but also a delight to interact with, ensuring they remain valuable and engaging over time.

Tip 3: Specificity is Everything

Specificity is critical to the performance and relevance of GPTs. Tailoring the input data and clearly defining the goals of the model are essential steps in ensuring that the GPT delivers accurate and contextually appropriate responses. Specificity in the knowledge you provide helps the model grasp the nuances of the subject matter, enabling it to understand and replicate the desired tone, style, and level of detail. This focused approach ensures that the GPT serves its intended purpose more effectively, whether it's providing expert advice in a particular domain, generating content that matches a specific voice, or performing tasks that require a deep understanding of a niche topic.

Now that multiple GPTs can interact within a single chat window (which I will touch on in later articles), my prediction is that use cases for GPTs will become more tightly defined and more nuanced. Designing catch-all GPTs will quickly give way to thinking about GPTs as semi-autonomous agents that execute a very specific list of tasks extremely well. We’re essentially designing them to be “called in” for their expertise, after which we continue the conversation.

Part 3: Limitations of GPTs

Let’s clear the air. GPTs are powerful, but some very notable challenges have come up when designing them.

One of the biggest limitations is organizing the “knowledge architecture” that gives a GPT the sophisticated understanding it needs. In theory, uploading up to 20 documents (the maximum allowed) should enrich the GPT’s responses, but in practice, maxing out the knowledge base tends to compromise effectiveness, which is a huge bummer.

GPTs burdened with excessive input can struggle with maintaining consistency, accessing the uploaded knowledge, or staying on track during interactions. These issues of overload and inconsistency show up as reduced performance: GPTs stop working, struggle to retrieve relevant information, or drift from the intended subject matter.

An illustrative example of this challenge is my attempt to maximize knowledge input, which, instead of enhancing the GPT's output, highlighted the system's current limitations in handling extensive datasets.

I trained the knowledge base of a GPT on the Mandate for Leadership 2025, the Heritage Foundation’s (frightening) 900+ page document on overhauling the federal bureaucracy. I made it because I wanted to understand it without reading the entire thing, and I wanted an objective analysis of the proposals.

My plan was to have one Conversation Starter prompt the GPT to “print” the table of contents; users could then ask questions about particular sections to go deeper. They would be able to easily pick a section, ask about it, and vet it against constitutional law and political scientists’ perspectives (which I also uploaded). But no matter how I structured the data in the knowledge base (correcting the labeling, reorganizing the chapters, uploading a single document vs. 18 separate documents, etc.), the GPT could not reliably access the material. I’ve seen similar issues with smaller sets of data, but this was the most pronounced (and frustrating) experience I’ve had.
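If you want to experiment with restructuring a long source yourself, scripting the split is easier than doing it by hand. Below is a minimal Python sketch, assuming the document has already been exported to plain text and that its chapter headings can be matched with a regular expression. The function names, the `NN_part.txt` naming scheme, and the separate table-of-contents file are hypothetical, illustrative choices, not a GPT Builder feature; the only platform constraint it encodes is the 20-file upload cap mentioned above. It only changes how the material is packaged, and as the experiment above shows, packaging alone did not make retrieval reliable.

```python
import re
from pathlib import Path

# Upload cap for a GPT's knowledge files, as cited in this article.
MAX_KNOWLEDGE_FILES = 20


def split_by_heading(text: str, heading_pattern: str) -> list[tuple[str, str]]:
    """Split text into (title, body) sections at every line matching heading_pattern."""
    heading_re = re.compile(heading_pattern, re.MULTILINE)
    matches = list(heading_re.finditer(text))
    if not matches:
        # No headings found: treat the whole text as one section.
        return [("Document", text.strip())] if text.strip() else []

    sections = []
    preamble = text[: matches[0].start()].strip()
    if preamble:
        # Keep any front matter that appears before the first heading.
        sections.append(("Preamble", preamble))
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections.append((match.group(0).strip(), text[match.start():end].strip()))
    return sections


def pack_into_files(sections: list[tuple[str, str]], max_files: int) -> list[list[tuple[str, str]]]:
    """Group consecutive sections into at most max_files batches of similar size."""
    if not sections:
        return []
    n_files = max(1, min(max_files, len(sections)))
    per_file = -(-len(sections) // n_files)  # ceiling division
    return [sections[i:i + per_file] for i in range(0, len(sections), per_file)]


def write_knowledge_files(text: str, heading_pattern: str, out_dir: str,
                          max_files: int = MAX_KNOWLEDGE_FILES) -> int:
    """Write grouped sections plus a table-of-contents file; return the file count."""
    sections = split_by_heading(text, heading_pattern)
    # Reserve one upload slot for the table of contents.
    batches = pack_into_files(sections, max_files - 1)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    toc_lines = []
    for n, batch in enumerate(batches, start=1):
        name = f"{n:02d}_part.txt"
        (out / name).write_text("\n\n".join(body for _, body in batch), encoding="utf-8")
        toc_lines.extend(f"{title} -> {name}" for title, _ in batch)
    (out / "00_table_of_contents.txt").write_text("\n".join(toc_lines), encoding="utf-8")
    return len(batches) + 1
```

For example, `write_knowledge_files(text, r"^Chapter \d+.*$", "knowledge")` would write a table of contents plus the grouped chapter files into a `knowledge/` folder, ready to upload in the Configure tab. Keeping the table of contents in its own small file mirrors the “print the table of contents” Conversation Starter idea, though whether a GPT retrieves it more reliably is something to test rather than assume.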

In Conclusion

While there are limitations, GPTs demonstrate a bold vision for interacting with GenAI tools. In the next article, I plan to discuss the newest innovation: GPT interactions, which allow multiple GPTs to interact with each other within a single chat window. It’s a major step toward interacting with GenAI through AI agents.

Stay tuned. Happy prompting!